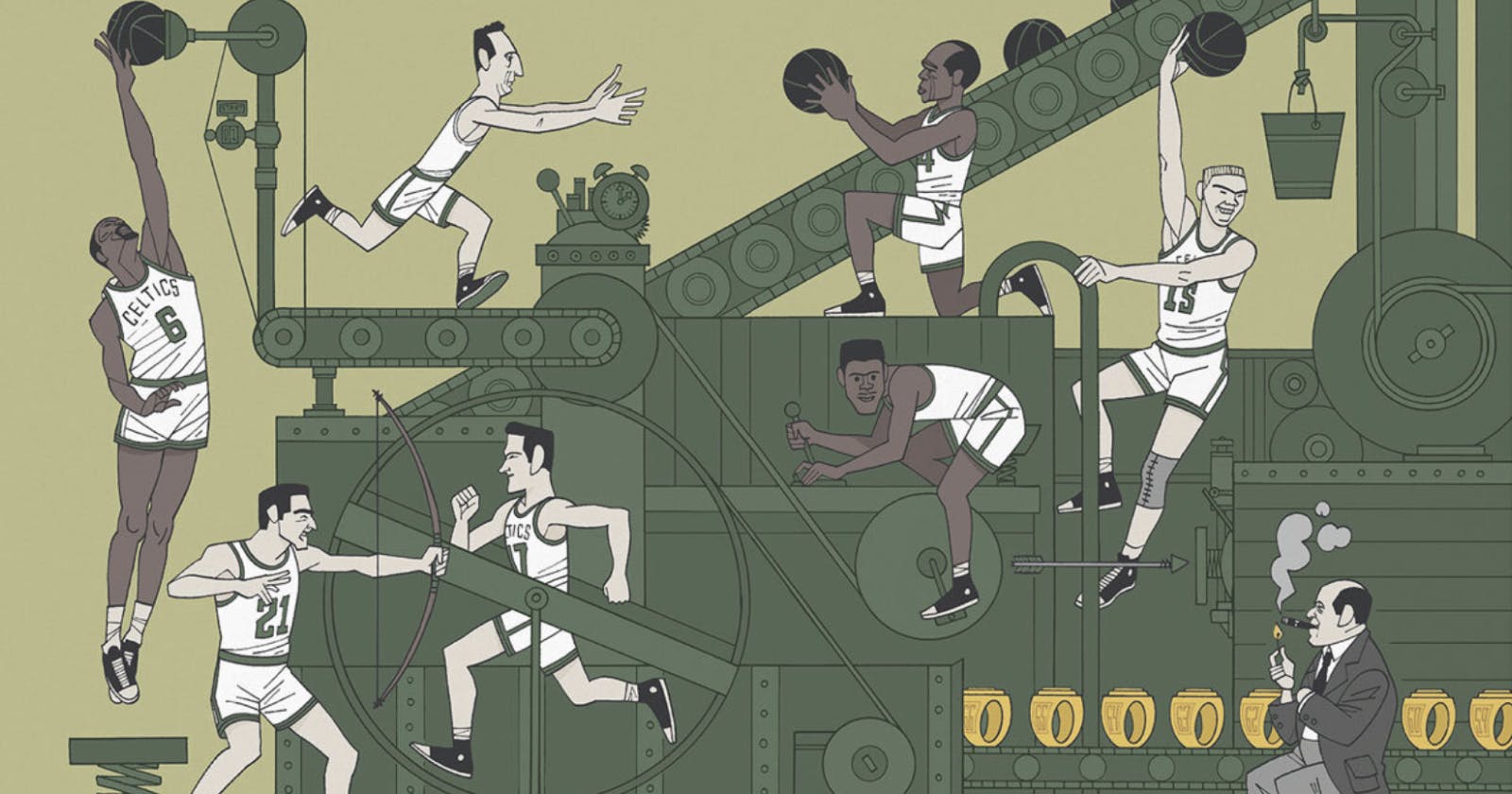

If you’ve seen the movie “Moneyball”, I used to do that for an NBA team. And I don’t do it anymore, because at some point (for reasons I don’t fully understand) I just stopped caring about basketball. But the whole experience changed my life. After years of grades, textbooks, SATs — all this shit that I did at a high level but didn’t really care about — moneyball was the first time since… I don’t know… the age of 12?… that I really turned my brain on. It taught me all sorts of lessons about not just numbers, but how the world works.

Fast forward to today.

There’s been a lot of buzz about measuring developer productivity, especially since McKinsey threw their hat in the ring, which, from what I’ve seen out of the engineering community, was about as well-received as the English occupation in Braveheart.

For a little context, if you’re not familiar with basketball analytics, it has been in full-on Renaissance mode for the past 20 years. In the year 2000, we clapped our hands — ra ra ra, like cavemen — whenever someone put the ball through the hoop. Today, cameras track the movement of every player on the court. Every time someone puts a ball through the hoop, it’s understood to be the culmination of a Markov chain, where everyone, from the man who passed the ball to the man who failed to defend the shot, is awarded fractional credit for the outcome of the play.

I worked for the Charlotte Hornets in the middle of this period (call it the Early Renaissance 👨‍🎨), and I get the sense that developer productivity, as a discipline, is at the beginning of this journey. So I want to share some of the lessons that moneyball taught me. After all, what is moneyball if not the study of workplace productivity?

Work isn’t basketball

There’s just one problem. Work isn’t basketball.

In basketball there are lines on the court. There are rules. There are games with winners and losers.

You might feel like your workplace has lots of rules (maybe you feel oppressed by Jan, the HR lady, and her insistence that you wear pants in the office), but when it comes to being “productive”, there are no rules.

So that’s one problem.

Here’s another problem. Have you ever been in one of those interviews where the interviewer pulls out a pair of calipers and measures your body fat percentage? Or, have you ever worked for one of those bosses who videotapes everything you do, and then screams at you in your 1-1s for not working hard enough? Have you ever chirped right back at that boss, told him straight up to FUCK OFF because everyone on the planet knows that you’re 100x more valuable to the company than he is?

Right. So the NBA has a different set of norms around performance evaluations.

Basketball has rules, and basketball players expect to be measured and analyzed. This means that basketball is easier to analyze, but that’s okay. It’s important to map out the limits of our knowledge. There are still lessons to be learned. I’ll put forward a few observations, which will hopefully inspire some ideas and criticisms of their own.

Lesson 1: Before you look at the numbers, look at the people

Offense is easy to measure. You can see the ball go through the hoop. Defense, on the other hand, is hard to measure.

Because it’s hard to measure, coaches are less certain about who’s good at defense. And because they’re less certain, they won’t pay as much for a good defender. Why would you pay someone a ton of money when you don’t really know what you’re paying for?

Coaches don’t get fired for playing it safe. They get fired for making dumb mistakes.

This might seem obvious, but I think it’s profound. It perfectly captures so many dynamics: personal incentives, uncertainty, the data, and how easy it is to collect that data.

So this is one of the great lessons of sports analytics — when looking at the numbers, first look at the people. What are their incentives? What data is easily available to them?

There are the obvious examples, like recruiters and resumes: we know that recruiters overvalue buzzwords. But I think most models of developer productivity (whether that’s the way a recruiter reads a resume, the way a manager conducts performance reviews, or an academic paper on the topic) suffer from a deeper problem. These models are all a reflection of us and our problems. It’s our old friend, the American work psychosis, dressed up as “this is what it means to be productive”.

I don’t know exactly why, but we all feel this need to do things all the time. (And I include myself in this cohort.) If we’re not working, we are not “productive”. And if we’re not “productive,” then we’re worthless scum. So we fetishize numbers that show how much we’re doing. Numbers that are, not surprisingly, easy to collect. Lines of code. Pull requests. Deployments. That’s one category of “productivity” models.

There’s another category of models that are (unwittingly) the other side of the same coin. It’s the people who recognize that doing things for the corporation is not the ultimate pursuit in life, but they don’t have a satisfactory alternative, so they turn inwards — are you, the developer, personally satisfied? Are you happy? These models are also based on numbers that are easy to collect — flimsy surveys that don’t hold anyone accountable for real production.

Both of these models miss the point. Just imagine a system for rating basketball players that only measured how many calories they burned. Or how happy they were afterwards, on a scale of 1-10. It is patently absurd.

The only thing that matters in basketball is whether you’re helping the team win, and the only thing that matters in business is whether you’re creating value for other people. Now, this is incredibly hard to do. (I am now, more than ever, aware of this.) It is equally (if not more) difficult to measure. But, if you’re studying developer productivity, this is the battle worth fighting!

(Note: I’m not saying that doing things and personal satisfaction are bad. If you’re missing either… that’s a red flag. But they aren’t the same thing as creating value. They’re just proxies, and, ironically, the more we pay attention to them, the worse proxies they become.)

Lesson 2: Whether you realize it or not, your basic vocabulary is a “model”

There are five traditional positions in basketball:

Point guard

Shooting guard

Small forward

Power forward

Center

LeBron James is labeled a “small forward”, mostly because of his height and body type, but he is normally the player who brings the ball up the court (the traditional responsibility of the point guard), he shoots lots of 3s (shooting guard), and he is a prolific rebounder (center).

So the traditional positions are a bad model, according to the only criterion on which a model should be judged: they don’t reflect how the world actually works.

When I was working for the Hornets, we studied this problem, and we concluded that there are somewhere between 12 and 16 positions. Some players do everything, like LeBron; other players specifically stand in the corner and do nothing but shoot 3s.

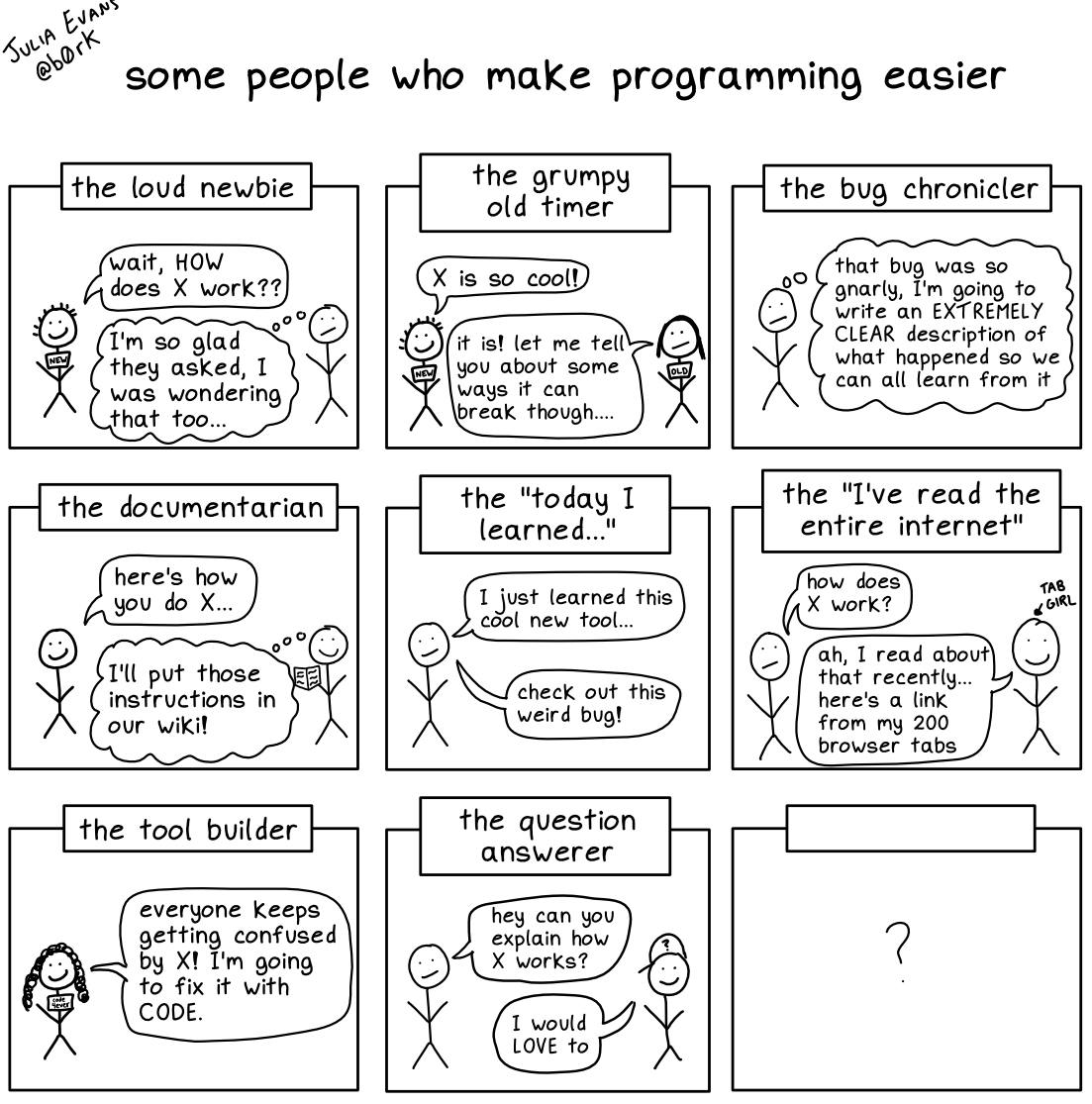

Software engineering has the same problem. We talk in terms of ICs, managers, and senior devs, but those words aren’t good enough. They don’t describe the actual roles that people play on an engineering team.

Compare that to this cartoon from Julia Evans. This is what a good model looks like. I can immediately recognize people from my life in these stick figures.

I can’t tell you what all the “positions” in software engineering are — my experience is too limited — but I can tell you that on the Flank team, the four of us very obviously play different positions. Bryce is a hacker, I’m a designer, Trevor is an editor/producer, and Mallory is the product’s older sister.

If a customer asks me, “Can you build this feature?” I will painstakingly explain the various trade-offs and then spend 3 weeks designing an elegant solution. Bryce will say, “Yeah, no problem!” and have something working an hour later. My code is well-organized. Bryce’s code is spaghetti.

Trevor is a film major, and it shows. He is really good at collaboratively getting to the right solution. He’s amazing at pair programming, especially from the passenger seat.

Mallory made sure that her little brother submitted all his college applications on time, and she does the same thing for our product. She keeps tabs on every feature that every user wants. If Mallory left, we wouldn’t need to hire another engineer, we’d need to hire an older sister. Has there ever been a job posting for that?

We talk about ourselves this way, and it helps us a lot. We know how we fit together as a team. We know who should do what. We know what we’re good at and what we’re bad at. A lot of classic organizational problems simply… go away… because we have the right words.

Zooming out to the department level…

This point about labeling roles — it doesn’t just apply to individuals, it applies to the whole department as well. At some companies the engineers are referred to as “IT”, and they live in third world countries. At other companies they’re the innovative engine, and they’re treated like gods.

There isn’t a great analogy for this in basketball. It’s almost like they’re different sports altogether.

Lesson 3: Don’t be afraid to get your hands dirty

There’s a misconception that scouts watch film and the moneyballers look at numbers. The truth is, to be good at either, you need to do both.

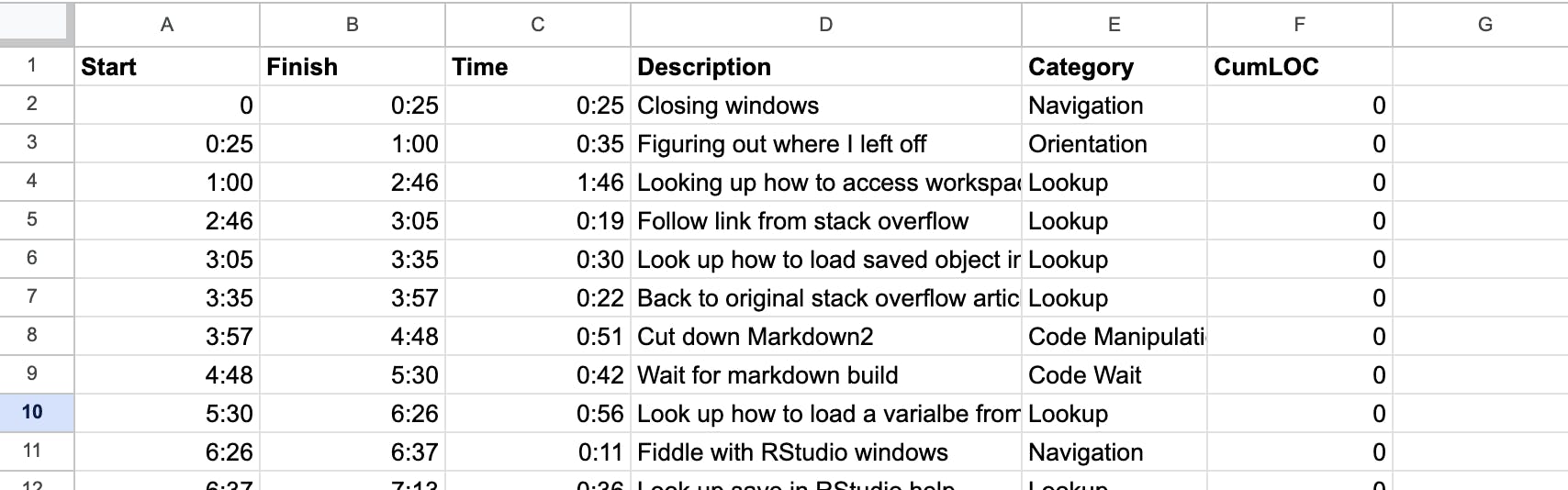

I can’t believe I actually found this spreadsheet, but in 2018 I screen captured myself programming for an hour, and I recorded all the micro-tasks.

There were some amusing learnings. For example, I spent 4 whole minutes randomly clicking browser tabs, like a cracked-out monkey. 🔥 🥄 🐒 Also, in one hour of coding, guess how long I spent actually writing new code? 36 seconds!

The bigger learning is that it is possible to segment tasks into small units of work, even if they only last for 30 seconds. I grouped my tasks into 9 categories:

| Category | Total time (min:sec) |
|---|---|
| Moving code around, like copy + paste | 21:31 |
| Googling / StackOverflow | 15:10 |
| Testing code / REPL | 6:40 |
| Staring off into space | 5:00 |
| Cracked out monkey | 3:58 |
| Moving windows around | 2:31 |
| Waiting for code to run | 2:18 |
| Remembering where I left off | 0:43 |
| Coding | 0:36 |
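If you want to check the arithmetic yourself, here’s a minimal Python sketch that converts the min:sec totals into each category’s share of the recording. The dictionary just restates the table; `to_seconds` and `shares` are illustrative helper names, not part of any real tool.

```python
# Time per category from the one-hour screen recording (min:sec), restated from the table.
time_log = {
    "Moving code around, like copy + paste": "21:31",
    "Googling / StackOverflow": "15:10",
    "Testing code / REPL": "6:40",
    "Staring off into space": "5:00",
    "Cracked out monkey": "3:58",
    "Moving windows around": "2:31",
    "Waiting for code to run": "2:18",
    "Remembering where I left off": "0:43",
    "Coding": "0:36",
}


def to_seconds(min_sec: str) -> int:
    """Convert a 'min:sec' string to a whole number of seconds."""
    minutes, seconds = min_sec.split(":")
    return int(minutes) * 60 + int(seconds)


def shares(log: dict[str, str]) -> dict[str, float]:
    """Return each category's fraction of the total logged time."""
    totals = {category: to_seconds(t) for category, t in log.items()}
    grand_total = sum(totals.values())
    return {category: secs / grand_total for category, secs in totals.items()}


total = sum(to_seconds(t) for t in time_log.values())
print(f"Total logged: {total // 60}:{total % 60:02d}")
for category, share in sorted(shares(time_log).items(), key=lambda kv: -kv[1]):
    print(f"{category:<40} {share:6.1%}")
```

The nine categories add up to 58:27, a little under the full hour. Googling / StackOverflow comes out at roughly a quarter of the logged time, and Coding at about 1%.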

These microtasks — they’re almost like plays in basketball. Labeling them is helpful for two reasons:

We can compare one type of “play” against another. For example, clicking around like a cracked-out monkey is obviously a poor use of time. Using multiple monitors and having a little bit of a plan (I normally just write down a few words, or draw a simple diagram) has really helped me tame the monkey. 🦮🐒

We can analyze all the “plays” in a given category. I spent a quarter of my time on StackOverflow. I went back and watched those segments, and it was pretty sad. I would open up an answer in a new tab, skim the whole thing in 5 seconds, not understand any of it, open another tab in frustration, and so on, then get to the point of having 10 tabs open and start going back through old answers, never understanding any of it. Ironically, the solution was in the very first tab that I opened. 🤦‍♀️ Now, I really try to channel my Julia Evans zen and seek understanding rather than frantically searching for the first working implementation.

Lesson 4: You’ll learn more from testing simple models than building fancy ones

A common way to model basketball skill is as a linear combination of sub-skills. Something like this:

| Skill | Score (out of 5) |
|---|---|
| Shooting | 4 |
| Passing | 3 |
| Rebounding | 5 |
| Dribbling | 2 |
| Defense | 5 |
| Overall | 3.8 |
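Here’s a minimal sketch of that linear combination, assuming equal weights (which is what reproduces the 3.8 in the table). The `weights` argument is a hypothetical knob for models that care more about some sub-skills than others.

```python
from typing import Optional

# Sub-skill scores (out of 5) for the example player in the table.
scores = {"shooting": 4, "passing": 3, "rebounding": 5, "dribbling": 2, "defense": 5}


def overall(scores: dict[str, float], weights: Optional[dict[str, float]] = None) -> float:
    """Linear combination of sub-skills, normalized by the total weight."""
    if weights is None:
        # Equal weights turn this into a plain average.
        weights = {skill: 1.0 for skill in scores}
    total_weight = sum(weights[skill] for skill in scores)
    return sum(scores[skill] * weights[skill] for skill in scores) / total_weight


print(overall(scores))  # 3.8
```

Any weighting you pick is itself a claim about how basketball works, which is exactly what the test below is for.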

It’s not a terrible model. It’s easier and more reliable to evaluate specific skills than it is to evaluate the whole player. But to learn anything you have to evaluate the model; otherwise, it’s just a masturbatory numbers game. And to evaluate the model, you have to ask yourself, “Does this explain how the game of basketball is played?” A simple way to evaluate this model would be to:

Score every player in the NBA

Add up all the scores by team (weighted by how many minutes each player was on the court)

See if the team-level tallies correlate with wins.

We used to do this as a quick-and-dirty way of evaluating different theories about player value.
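A quick-and-dirty version of that backtest might look like the sketch below. The player ratings, minutes, and win totals are made up purely for illustration, and `team_tallies` and `pearson` are hypothetical helpers. It follows the three steps: score players, combine them by team weighted by minutes, then correlate the team tallies with wins.

```python
from math import sqrt

# Hypothetical data: (team, player rating out of 5, minutes played).
players = [
    ("A", 4.2, 2800), ("A", 3.1, 2000), ("A", 2.5, 900),
    ("B", 3.0, 2500), ("B", 2.8, 2400), ("B", 2.0, 1500),
    ("C", 3.9, 3000), ("C", 3.5, 2200), ("C", 1.8, 600),
]
wins = {"A": 52, "B": 35, "C": 48}  # hypothetical season win totals


def team_tallies(players):
    """Minutes-weighted rating per team (weighted sum divided by total minutes)."""
    weighted, minutes = {}, {}
    for team, rating, mins in players:
        weighted[team] = weighted.get(team, 0.0) + rating * mins
        minutes[team] = minutes.get(team, 0) + mins
    return {team: weighted[team] / minutes[team] for team in weighted}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


tallies = team_tallies(players)
teams = sorted(tallies)
r = pearson([tallies[t] for t in teams], [wins[t] for t in teams])
print(f"correlation between team tallies and wins: {r:.2f}")
```

Dividing by total minutes just keeps the tally on the 0 to 5 scale; over a real NBA season every team plays nearly the same number of minutes, so it is close to a straight minutes-weighted sum. With three made-up teams this proves nothing; a real check would use the whole league. On Python 3.10+, `statistics.correlation` can replace the hand-written `pearson`.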

With Flank, we used to get together on Mondays and say, “Here’s what I want to get done this week.” Then, Friday would roll around, and we’d look at the list and have a good laugh at how naive we were 5 days earlier. 🤣

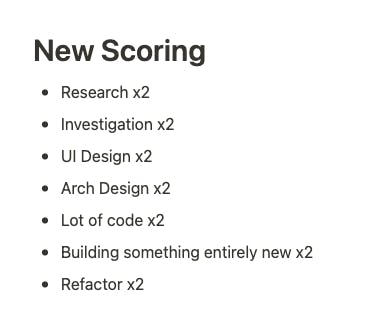

At some point, we decided to come up with a “points system” for estimating how long each task would take. Our first attempt was really simple. We came up with some categories (e.g., “this involves some UI design”) and then, for each task, we’d add up the number of categories. A linear combination, in other words.

This model worked well on average, but when it was wrong, it was really wrong. We’d add up the points and say, “This should take a day”, and then it would take 2 weeks.

We switched from a linear combination to an exponential combination. So, say that a task involved research, UI design, and architecture design. Instead of 1 + 1 + 1 = 3, the new score would be 2 x 2 x 2 = 8.
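The two scoring schemes are easy to put side by side. This is a sketch, assuming every category is worth 1 point in the linear scheme and doubles the estimate in the exponential one (matching the 1 + 1 + 1 = 3 versus 2 x 2 x 2 = 8 example); the category names are illustrative.

```python
def linear_points(categories: set[str]) -> int:
    """First scheme: one point per category the task touches (1 + 1 + 1 = 3)."""
    return len(categories)


def exponential_points(categories: set[str]) -> int:
    """Second scheme: each category doubles the estimate (2 x 2 x 2 = 8)."""
    return 2 ** len(categories)


task = {"research", "UI design", "architecture design"}
print(linear_points(task), exponential_points(task))  # 3 8

# The gap widens quickly as a task touches more areas of the system.
for n in range(1, 6):
    print(f"{n} categories: linear={n}, exponential={2 ** n}")
```

Multiplying rather than adding means each extra category costs more the more categories are already involved, which fits the “surface area” intuition.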

Interestingly, this system was less accurate on average (it would frequently overestimate tasks) but very accurate in the aggregate.

This is far from a perfect model, but there’s a lesson in here somewhere. Something about the surface area of the problem. As the task touches more areas of the system, it becomes more difficult to estimate.

Lesson 5: Always keep the ultimate goal in the back of your mind

NBA teams shoot a lot more 3s now. This is the real legacy of the “moneyball” movement. And it might seem like an unbecoming legacy for a whole Movement, with conferences and academic papers and fancy new titles. After all, it doesn’t take a genius to deduce that shooting 33.3% from 3 (1.00 expected points per shot) has a higher expected value than shooting 49% from 2 (0.98).
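The arithmetic is just make percentage times point value. Here’s a small sketch (the function name is only for illustration) that also works out the break-even three-point percentage against a 49% two-point shot:

```python
def expected_points(make_pct: float, shot_value: int) -> float:
    """Expected points per attempt: probability of making the shot times its value."""
    return make_pct * shot_value


three = expected_points(1 / 3, 3)  # 1.0
two = expected_points(0.49, 2)     # 0.98

# Three-point percentage needed to match a 49% two-point shot.
break_even = two / 3               # about 0.327, i.e. roughly 32.7%

print(f"3PT: {three:.2f}, 2PT: {two:.2f}, break-even 3PT%: {break_even:.1%}")
```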

But I think this is how the world works. The biggest gains don’t come from a super complicated model — they come from a simple observation that triggers a paradigm shift. In baseball it was the observation that runs win games (obvious), and that every single thing that a baseball player does on the field can be equated back to runs (not obvious for many years).

In basketball, it’s winning. How do you win? You score more points. How do you score more points? You increase the expected value of each shot. How do you do that? Well, the easiest way is to shoot more three pointers. That sounds obvious, but millions of people (some of them quite smart) watched basketball for DECADES without having this realization.

In business, it’s free cash flow (if you’re an accountant) or delighting customers (if you’re a product person). How do you delight more customers? That seems like an impossibly hard question compared to scoring points in basketball (and certainly outside the engineers’ responsibility). But I think it’s essential to ask the question nonetheless.

As a thought experiment, let’s just start with the question, “How do engineers delight more customers?” Actually, we can make it easier on ourselves by asking the inverse question — “What gets in the way of engineers delighting their customers?”

We’ve all experienced those problems. It’s things like building features to impress investors.

So consider this framework:

Do the engineers use the product themselves?

Does everyone on the team have the same understanding of what they’re building?

Are they inspired by their founder?

Does the manager push back on bad ideas? (from investors or customers)

Can new hires ask dumb questions?

Hmmm. Interesting. That doesn’t look anything like a “productivity” framework. It has more to do with culture than the number of pull requests. But if I were going to buy a piece of software, I’d rather have the answers to these questions than to know how many PRs the team reviews every week.

This is unexpected! But good! Asking the right question is taking us in interesting directions. And this framework reveals an obvious but difficult truth…

Lesson 6: Be honest with yourself, even if it means relinquishing the satisfaction of a nice, clean answer

The framework from the last section reveals an unfortunate truth, which is that leadership productivity is usually more important than worker productivity. That’s unfortunate because it’s not well-understood. It’s not easy to measure.

In basketball, it is really difficult to model the effect of the coach. When a player hits a shot, how do you tease out the effect of a man standing 40 feet away in a suit? The effect is not nearly as big as the Man Who Shot the Ball, but it’s not zero either…

I realize I’m raising questions more than I’m providing answers, but my guess is that existing models (whether it’s an academic paper or the way a recruiter scans a resume) massively underweight leadership productivity. That is, they overvalue candidates who worked for good leaders, and they undervalue candidates with leadership skills of their own.

Lesson 7: Try to think about all the outcomes around you as being influenced by some environmental factor

As an NBA team, you always want to know how good you really are (and how good everyone else is too). The simplest way to measure this is by looking at your record. But a team’s record is “noisy” — for example, some teams have to travel more than others, so their record is worse for a reason that’s totally outside their control.

You can imagine a very simple model for this:

basketball performance = skill ± exogenous factors
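A toy simulation makes the point concrete. This is only a sketch under made-up assumptions: each game is won with probability equal to a team’s skill minus a hypothetical travel penalty, and the 0.55 skill and 0.03 penalty are invented numbers. Two teams with identical skill end up with different records purely because of the exogenous term.

```python
import random


def simulate_record(skill: float, travel_penalty: float, games: int, seed: int) -> int:
    """Return wins over `games`, each won with probability skill - travel_penalty.

    skill: hypothetical 'true' win probability against an average opponent.
    travel_penalty: hypothetical exogenous drag outside the team's control.
    """
    rng = random.Random(seed)
    p = min(max(skill - travel_penalty, 0.0), 1.0)  # clamp to a valid probability
    return sum(rng.random() < p for _ in range(games))


# Same skill, different schedules. Over a long run, the travel-heavy team's
# record is worse even though the two teams are equally good.
GAMES = 100_000
light = simulate_record(skill=0.55, travel_penalty=0.00, games=GAMES, seed=1)
heavy = simulate_record(skill=0.55, travel_penalty=0.03, games=GAMES, seed=2)
print(light / GAMES, heavy / GAMES)

# Working backwards: observed win rate + estimated penalty is roughly the skill.
print(heavy / GAMES + 0.03)
```

Drop `games` to 82 and try a few seeds: the game-to-game noise in a single season is larger than the travel effect, which is exactly why a record alone is a noisy measure.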

The same thing applies to software engineering. In my last job, I interviewed over 200 data scientists. These were live coding exercises. Now, can you guess the one factor that, above all else, determined how quickly a candidate finished the exercise? I’ll give you a hint — it was not intelligence or experience or GPA… ⏳

It was whether the person used a Jupyter Notebook! (Or RStudio, for the R crowd.) Using a Jupyter notebook sped up the task by roughly 2x.

This insight leads to a simple model for engineering:

individual performance = skill * tooling

This leads to an interesting conclusion: choosing a tool might be the most important determinant of individual productivity! What does that mean for teams of people? Could an exceptional Tool Chooser + 7 junior engineers outperform 8 senior engineers who are not “tool curious”? If you take this question to the logical extreme (the 8 senior engineers do their work on 1960s IBM mainframes), the answer is obviously yes. But how many people think this way when they assemble an engineering team?
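Here’s a sketch of the multiplicative model applied to that thought experiment. Every number is hypothetical except the rough 2x tooling multiplier, which echoes the notebook effect from the interviews, and the model ignores coordination between teammates entirely.

```python
def individual_output(skill: float, tooling: float) -> float:
    """Multiplicative model: individual performance = skill * tooling."""
    return skill * tooling


def team_output(members: list[tuple[float, float]]) -> float:
    """Naively sum each member's (skill, tooling) output; ignores coordination."""
    return sum(individual_output(skill, tooling) for skill, tooling in members)


# Hypothetical team 1: a Tool Chooser (skill 0.8) plus 7 juniors (skill 0.6),
# all working with tooling that doubles their output.
tool_curious = [(0.8, 2.0)] + [(0.6, 2.0)] * 7

# Hypothetical team 2: 8 seniors (skill 1.0) on baseline tooling.
not_tool_curious = [(1.0, 1.0)] * 8

print(team_output(tool_curious), team_output(not_tool_curious))
```

The multiplication is the whole point: in an additive model (skill + tooling), a better tool is a flat bonus, but here it scales everyone’s skill, so one good tool decision is multiplied across the team. Shrink the multiplier to 1.2x and the seniors come out ahead, so treat this as a way to frame the question, not an answer to it.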

Lesson 8: “Unscientific” is scientific / Beware the model!

This scene from “Moneyball” is amazing. This is actually how scouts talk. Like, to a tee.

As a hyper-rational, over-educated dumdum, it’s easy for me to mock the scouts. But the problem isn’t that the scouts are “unscientific” — it’s that the message has to travel from the gut to the mouth, and somewhere along the way, it gets garbled. I’ll tell you how I know this.

With the Hornets, I did a meta-analysis of player evaluation models. Initially, it was just a comparison of “analytical” models. But, just for fun, I took all of our scouts’ ratings (those “subjective,” “unscientific” ratings 🤮) and threw them into the analysis. Turns out the scouts’ predictions were better than any of the “analytical” models!

So it’s a beautiful lesson of moneyball, really: the tools of moneyball are frequently abused, to the detriment of moneyball. (Lesson 1: First, look at the incentives of the moneyballer ☯️)

Tids and Bits, towards a new model

Understand the rules of the game. Just because an engineer won’t let you videotape him while he does [that thing], it does not mean [that thing] is unimportant and should be dismissed.

If you want to find the blind spots in the numbers, first look at the people. What are their incentives? How are they collecting data? Recruiters will overvalue keywords. Managers will overvalue consistent task completion. EVERYONE overvalues doing things out of some existential dread.

There are many “positions” in software engineering other than “IC” and “manager”, for example “hacker”, “designer” and “older sister”. If you can build up that vocabulary, you’ll have a better idea of who to hire, and your team will communicate better.

Leadership productivity is almost certainly undervalued.

Watch film, and you’ll discover plenty of microtasks that can be labeled.

The difficulty of estimating tasks is a function of their “surface area”.

Choosing the right tool is an incredibly important skill!

Trust the gut feelings of people with experience, and remember that when you’ve got a model (i.e., a hammer), everything looks like a nail.

The Bob Taylor backtest

When people talk about Xerox PARC, they use words like “magical” and “brilliant”. They don’t talk about flow state, or feedback loops, or developer satisfaction, or velocity. And in all these books there’s a bittersweet resignation that PARC can’t be recreated — that it was a once-in-a-lifetime chemical reaction whose very participants don’t even know how to recreate it. But I would argue that is the job of moneyball. 10 years ago, the Golden State Warriors were magical. The way that Steph Curry and Klay Thompson and Draymond Green played together — nobody had ever seen anything like it. Now? Everyone plays like the Warriors. The Sacramento Kings had them on the ropes this year, for crying out loud. So it is possible to recreate the magic.

There’s one particular character from PARC who has always fascinated me, and that’s Bob Taylor. By all accounts, he seems to have been one of the most important people at PARC, if not the most important. But he was also, by all accounts, lacking technical skills, and it sounds like he was kind of an asshole. But, if you believe the Xerox PARC mythos, he’s one of the most “productive” engineers in history. In other words, he’s the Draymond Green of PARC.

So that’s my test. I’d like to see a developer productivity framework that explains how Bob Taylor was so productive. And my guess is that it doesn’t look anything like the current paradigm, and it definitely won’t be in a McKinsey report 😜

Appendix: Back and Forth with Ben Falk

Ben is the creator of Cleaning the Glass. When I first met him, he was working for the Portland Trail Blazers. He’s not only one of the best basketball minds on the planet, he’s also an incredibly welcoming person. When I was looking for a job in the NBA and didn’t have a single credential to my name, he took the time to read through my work, he hopped on the phone with me to discuss it, and he encouraged me to keep pushing forward.

If you've read this far, I have good news and bad news:

Good news: You get to read Ben's summary

Bad news: You could've skipped 4,000 words of Angus and just read Ben's take

Ben

This made me think of a few general themes that you're getting at that I think are pretty interesting.

- I like to say that in basketball we can describe "team productivity" fairly well. We measure it with wins and losses (though we know those aren't perfect!) and have lots of team-level data that can help us understand how teams win or where they're falling short. But even here we're not perfect. And so certainly if we're falling short on that level, deciding how individual players and coaching combine to produce that team productivity is very challenging!

I feel like that's a lot of what you get at here. First, in the world of software development, we don't even necessarily have a good analog to wins and losses, as you write. So measuring the overall team productivity itself is already a major challenge. And then all the more so it's incredibly challenging to go that one step down and try to figure out how individuals combine into team productivity.

- There's a big trap it feels like people fall into which is to take whatever data is easily available to them and rely on that. In basketball this often happens where box score stats are easy to get so people say "let's make a player value model out of box score stats". In software development, we have very little data, so the temptation is to measure based on what data we have, which, as you point out, is so far from what we actually mean by productivity.

In sports we have the luxury of having a lot of worker "productivity" captured on film and by all kinds of sensors so that we can have a better sense of how to sift through it for meaning, and we're still a long way from accurately measuring production.

So if we can't do it that well in sports, it's a monumental achievement to try to do so in software!

Angus

I didn’t have an underlying thesis in mind, but your reaction is clarifying my overall opinion, which is something like:

It is insanely hard to measure workforce productivity, which you and I know because basketball is basically the floor, and even basketball is exceptionally hard. So we could use some intellectual humility, but intellectual humility to the extreme (“we can’t know anything, what’s the point?”) isn’t useful either… there are useful tidbits waiting to be discovered, even if it doesn't form a complete model of developer productivity.

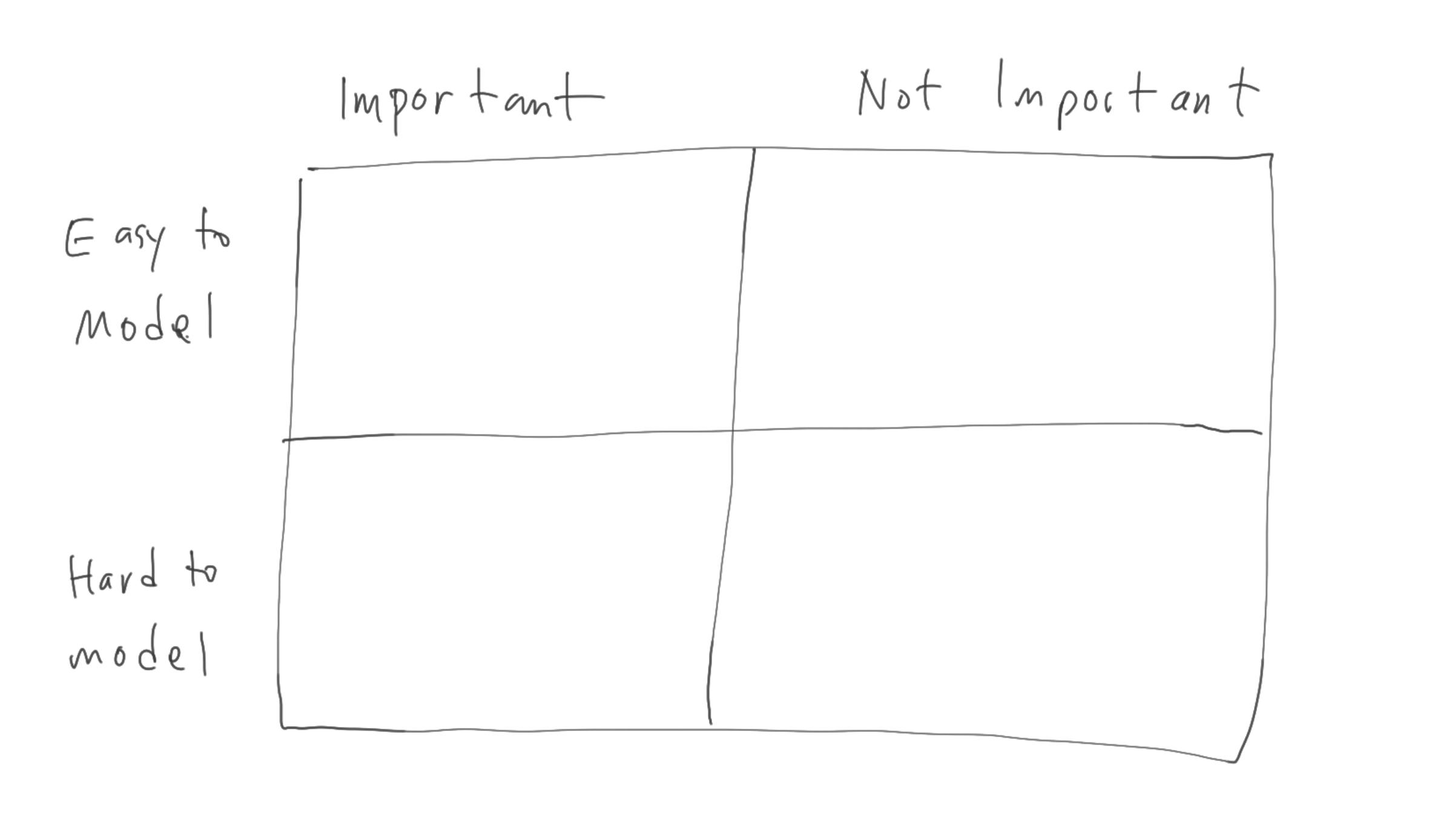

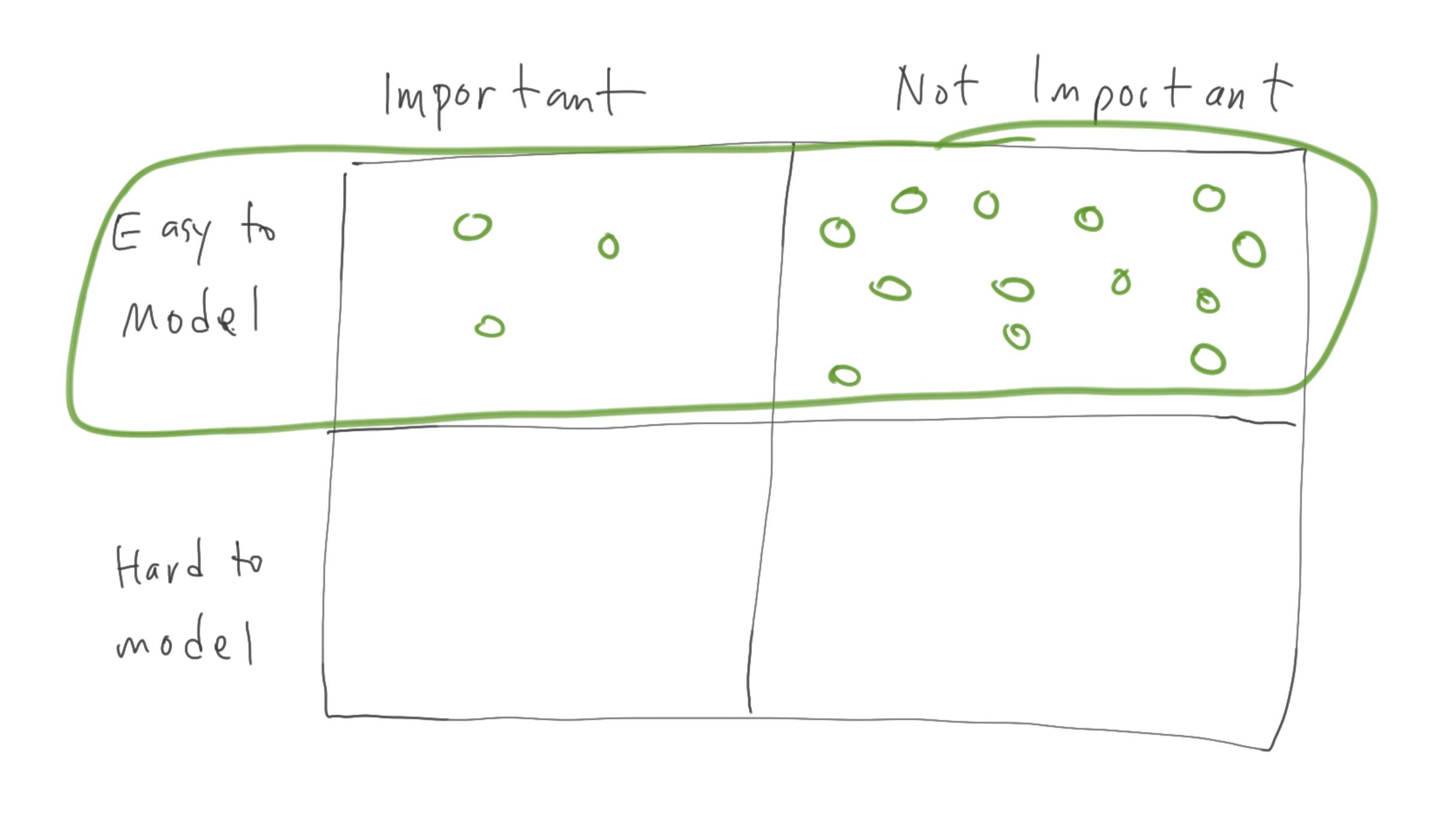

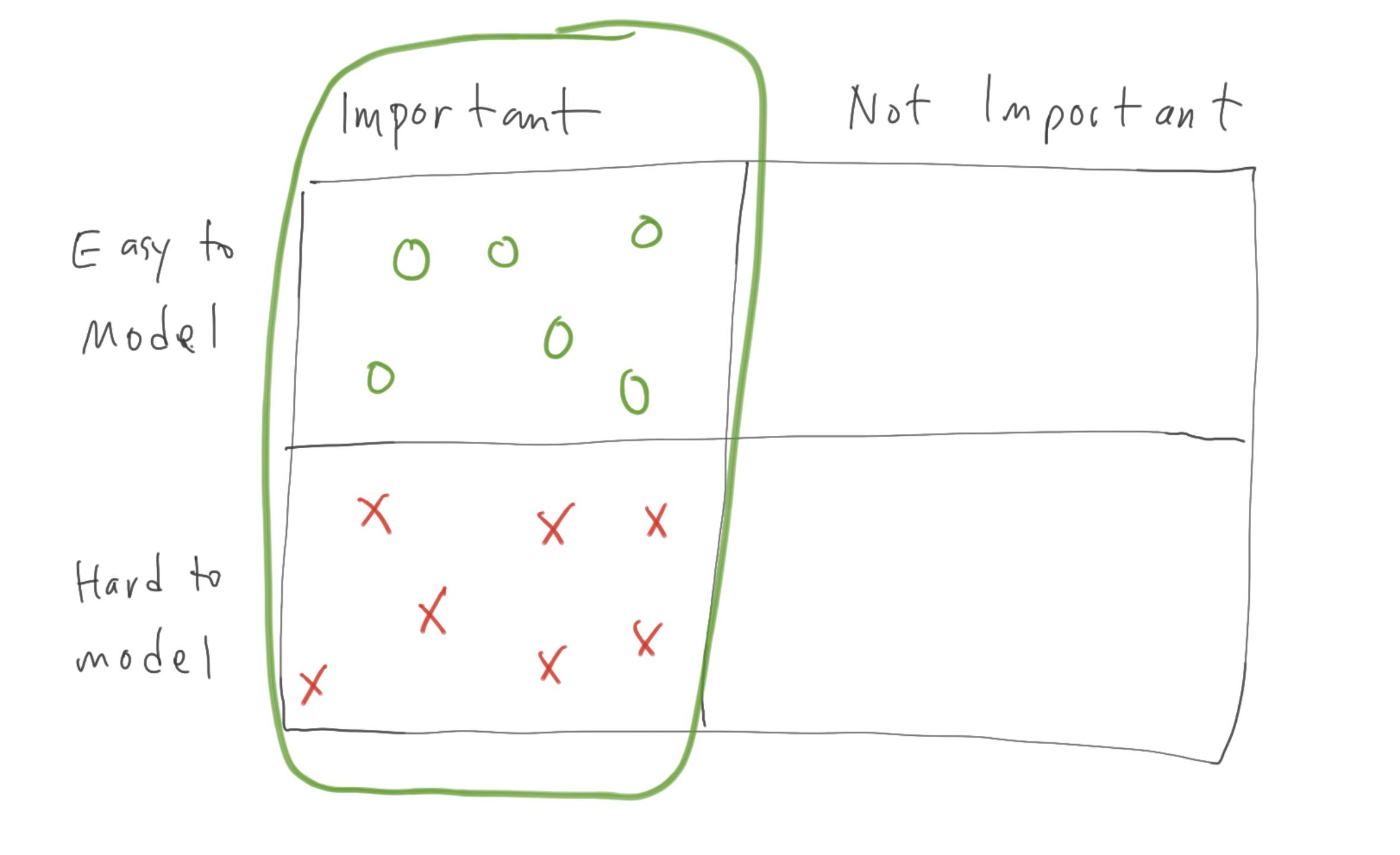

Actually, what do you think about this as a framework? Two dimensions: 1) whether it's important, 2) whether it's easy to measure / model.

It's human nature to live in the "easy to model" zone, but as a result, you end up spending most of your time on things that aren't actually important.

Instead, if you just focus on what's important, you run into a lot of dead ends (questions that are intractable), but, when you do model, you only model things that actually matter.

Ben

I do agree with you that if someone really wanted to take the time to truly study it, there surely are ways to get closer to the truth. But it would take a lot of work and understanding of underlying dynamics.

As far as your framework: Ah man, that's good...that actually fits something else I've been thinking a lot about lately and is a great framework for that too, which is that often when we try to solve hard problems, we slip back to solving easier to solve symptoms, instead of attacking the root of the problem. So basically just like you wrote, we spend lots of time either trying to solve unimportant problems or solving things that aren't really problems but convince us we're doing something (and often have unintended side effects). And spending time in the "hard to solve + important" zone is very very difficult, and takes a long time, which is why so few people do it.

So yeah, I like that framework a lot.